There was a day...

Some twelve years ago I had a Core 2 Duo laptop that unfortunately fell, breaking the screen. The computer had cost 700 euros when new. When I took it in for repair, they asked for 400 euros to replace the screen. I immediately knew that I would never, ever use that laptop as a portable computer again. I decided to use the VGA connection and turn it into a permanent desktop computer. After a year I bought another laptop because, for professional reasons, I needed portability. This was a turning point for my old friend. It was the moment at which it became a server in my personal private infrastructure.

This laptop, with 2 GB of memory, was running:

- Apache server

- MySQL server

- PHP webpages

- IRCd server

- Node.js based blog

- XMail email server

- BIND DNS server

- Asterisk VoIP server

- PPP VPN daemon

- A private root CA built with the OpenSSL toolkit for "secure" connections

- An NFS and SMB file server

And, sadly, these are only the most relevant services. Years went by, and I became more and more dependent on them. Well, not on my IRC daemon because, as we know, nobody chats over IRC anymore (this is not entirely true: there are lots of tech-driven communities that use this protocol as their communications backbone, but it is not as mainstream as it was some 15 years back).

A few years later

Some two years ago I started to rethink this whole snowflake solution and to research some alternatives. Along the way I collected some NFRs (non-functional requirements) that I would love to see fulfilled. Namely,

- I would like to have redundancy on my system

- I would like to have way more automation

- Ideally my applications should run isolated one from the other

- Ideally I would have control over all layers of the solution. This means control over the hardware as well as full customization of the final services

- It would be lovely if it scaled. Better still if some of the services could scale automatically.

- It needs to be cheap. I'm not a spoiled child with tons of money to spend, sadly.

That is a lot of NFRs. Sure, most of them sound completely unrealistic. But hey, if you dream, you had better dream big.

At the same time there was an ongoing revolution. The automation religion had awakened, with soldiers upon soldiers fighting under this motto:

Automate everything

The rise of machines

Lots of battles were won by these vast armies, and the old dark kingdom of the snowflake legacy was coming to an end.

With the growing internet market share of Google, Facebook, Yahoo and Twitter, a new technological philosophy was born. These behemoths of the digital era are very specific beasts with very specific needs. The scale at which these organizations operate had raised the level of complexity far beyond human capabilities, and with this, new solutions were needed.

Manual setup and intervention on an army of servers was no longer manageable. A new theory of relativity was needed to solve the scalability problem.

The solution was to automate everything that could be automated.

In the early days of the internet the world was very simple.

- One developer

- One server

- One idea

- One program

It was the time of the one to rule them all

Soon things started to get complicated. These once demigods of the digital era were no longer enough to fight the more and more complex problems they were asked to solve.

Companies started with just one idea, but suddenly they incorporated another one, and one more, and so on. Within a few years it was usual to see several dozen web-based applications managed by a few of these tech guys.

The fight didn't stop here. The world started to break apart, and with this, the army split in two. On one hand the soldiers responsible for creating the programs, the developers; on the other hand those responsible for solving all the operational hazards that come with deployment and the provisioning of dependencies: behold the dev-ops.

For some time we thought that this was enough. But soon even this was not enough. What was initially a one-man job gave way to a team of nerds, then to a set of teams, and then a single project became the work of several companies, each with dozens of teams of brave computer knights.

The dark was rising and rising.

Virtualization

As Gandalf the white...

...was everything we needed. A powerful wizard with a magic wooden stick and plenty of tricks up his sleeve.

As the complexity (the darkness) rose, so did the sophistication of automation (the powerful stick). The white wizard riding the majestic white horse turns out to be a complex set of tools and techniques.

The most important of them is the concept of virtualization.

With the advent of virtual machines, application development was able to divide the final solution into layers. By abstracting the hardware and OS provisioning tasks away from application development, the developer was able to focus on the juicy part: building business-oriented applications. Virtualization also enabled automation over a large pool of hardware resources, and the world was able to breathe for a few years.

You know, the idea is a pretty clever one. The problem until now was not the idea, but its building block, the VM.

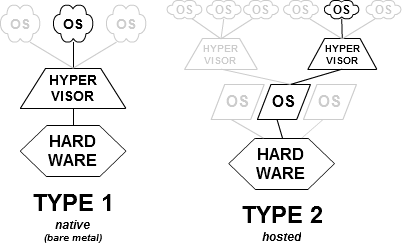

Virtualization is a philosophy that leverages a very powerful mechanism called the hypervisor.

In a nutshell, this is how virtualization is implemented. We decouple the operating system (OS) from the bare metal and put a hypervisor between them, which is responsible for talking to the hardware and, most importantly, for the scheduling. Operating systems were designed to talk to bare metal, not to hypervisors, so each OS is unaware of the others that may be running concurrently. That is pretty much the point, because this is what enables one of the biggest features of virtualization: isolation. It turns out that this comes at a price. With several OSs running concurrently on the same hardware, each of them tries to manage it, so we end up with a lot of CPU and memory overhead. One of the main responsibilities of an OS is process scheduling.

So now we end up with two layers of scheduling: scheduling of OSs and scheduling of processes.
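To make the two layers a bit more concrete, here is a toy sketch in Python. It is not how a real hypervisor or kernel scheduler works: it assumes plain round-robin at both levels, and the VM and process names are made up for illustration.

```python
from itertools import cycle


def two_level_schedule(vms, slices):
    """Toy model: for each time slice, the hypervisor picks a VM,
    then the guest OS inside that VM picks one of its processes.

    vms maps a (hypothetical) VM name to the list of its process names.
    Returns the list of (vm, process) pairs that got the CPU.
    """
    vm_turns = cycle(vms)  # layer 1: hypervisor round-robin over VMs
    process_turns = {name: cycle(procs) for name, procs in vms.items()}

    timeline = []
    for _ in range(slices):
        vm = next(vm_turns)                # decision made by the hypervisor
        process = next(process_turns[vm])  # decision made by the guest OS
        timeline.append((vm, process))
    return timeline


if __name__ == "__main__":
    machines = {"vm-a": ["apache", "mysql"], "vm-b": ["bind"]}
    for vm, process in two_level_schedule(machines, 4):
        print(vm, process)
```

Every time slice costs two decisions here, one per layer, and each guest OS decides without knowing anything about the other VM.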

While this is a pretty neat solution for big companies with tons of money to spend on hardware, it doesn't fit so well for little guys like me. And even big companies saw their costs scale every time they scaled their applications. Can we do better?

A new dawn with containers

A few clever guys came up with a pretty simple, yet clever idea.

Can we reduce the levels of scheduling in virtualization and use just one layer?

What if we achieve isolation not at the kernel level but at the process level? Hence Linux containers. Linux containers are just that: a set of kernel features, APIs and tools that enable isolation at the process level. We should note that this kind of isolation is not the same as, nor as secure as, isolation at the kernel level, but it turns out to be a good compromise. The equivalent of the VM in the traditional model is called a container in this brave new world.

Since this set of libraries was too low level, it didn't take long until higher-level tools were created to build upon these ideas. One of the most popular is Docker.

The traditional way of deploying applications vs the container way.

While Docker is a pretty awesome tool, it is too low level, and for virtualization to be useful as a complete company infrastructure framework we needed more.

Kubernetes as the new Matrix

So

Kubernetes rides bravely upon the white horse of a container box. Turns out that this is not Gandalf and it sucks...

... but it helps. By definition...

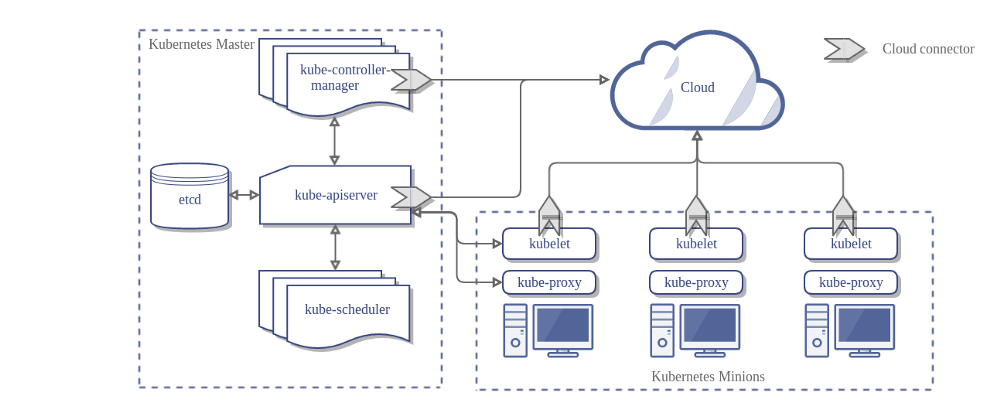

Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.

Yeah, I didn't understand it very well at first either. In a nutshell, Kubernetes leverages all the previous ideas and gives you an API that lets you create all your infrastructure in an automated way by using the power of containers. There is a catch though. A Kubernetes cluster assumes that you already have all the machines provisioned and working as a Kubernetes master or slave node.
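To give a feeling for what "an API to create your infrastructure" means, here is a minimal sketch in Python that builds a Kubernetes Deployment description and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The application name `blog`, the image `example/blog:1.0` and the replica count are placeholders I made up; this is an illustration, not the configuration of my cluster.

```python
import json


def deployment_manifest(name, image, replicas):
    """Describe a Deployment: 'keep `replicas` copies of this container running'.

    name and image are hypothetical values supplied by the caller.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels of the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("blog", "example/blog:1.0", replicas=2)
    print(json.dumps(manifest, indent=2))
```

The point is that you declare the desired state (two copies of the blog container) and leave it to the cluster to decide on which node each copy runs.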

To create a Kubernetes cluster we need the following steps:

- Install a Linux operating system on the machine

- Install the Kubernetes set of tools

- Configure the node to work as master or slave

- Repeat the whole process for all the hardware nodes.

This is tedious work. Worse, it is pretty error prone. Imagine that you have a 20-node cluster. You would need to repeat the same steps on those 20 machines. And these steps are, in reality, a very high-level description of what to do. It turns out that you could spend an entire day properly setting up one machine, with no guarantee that the remaining ones would be set up exactly like the first.

Seems to me we need yet another magical stick to solve this problem.

Fortunately we already have the stick, and it's called Ansible. It is an automation toolkit that enables us to reproduce the exact same shell commands on a set of N machines by connecting remotely over the SSH protocol.
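The core idea, the same command on every machine over SSH, can be sketched in a few lines of Python. This is only an illustration of the principle and not how Ansible is implemented; the host names and the `pi` user are assumptions, and it relies on the regular `ssh` client being installed with key-based login already set up.

```python
import subprocess


def ssh_command(host, command, user="pi"):
    """Build the argument list that runs one shell command on one host."""
    return ["ssh", f"{user}@{host}", command]


def run_everywhere(hosts, command, runner=subprocess.run):
    """Run the same command on every host and collect the exit codes.

    runner is injectable so the function can be tried without real machines.
    """
    exit_codes = {}
    for host in hosts:
        completed = runner(ssh_command(host, command),
                           capture_output=True, text=True)
        exit_codes[host] = completed.returncode
    return exit_codes


if __name__ == "__main__":
    # Hypothetical node names; replace them with the machines of your cluster.
    nodes = ["node1.local", "node2.local"]
    print(run_everywhere(nodes, "uname -a"))
```

Ansible adds a lot on top of this loop, such as inventories of hosts and reusable, repeatable tasks, which is what makes it usable for a whole cluster.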

We have the problem pretty much solved, right? Well, not so fast: I need machines. Remember that I'm a very cheap guy when it comes to spending money on hardware? Seems to me that I'm in a bit of trouble.

The army of PI

You don't need to spend lots of money on hardware. With just 300 bucks (euros, by the way) I was able to create a 5-node, ARM-based Raspberry Pi cluster.

A new dawn

This was a hell of a journey, right? And this is only the beginning. In the next posts I'll go into more detail on the whole process, the roadblocks, and some of the strategies used to successfully migrate my snowflake into an automated and versioned development laboratory.

Remember all the requirements I wrote down at the beginning of the post?

Turns out that we just fulfilled them. In the next posts we will see in more detail how we did it and what we can gain from a setup like this.

For the curious, you can have a peek at the source code here