The need

Virtualization is pretty much everywhere. Have you ever run a Java program? Run a Windows game on Linux using the Wine compatibility layer? Personally, I used to play the old Streets of Rage game, which was built for the Sega Genesis. What do all these technologies have in common? They all rely on some form of virtualization. According to Wikipedia's definition:

In computing, virtualization refers to the act of creating a virtual (rather than actual) version of something, including virtual computer hardware platforms, operating systems, storage devices, and computer network resources.

Today we will dive into the virtualization of operating systems. For developers, the concepts and tools of virtualization are key because so much of the development workflow depends on them. When you program an Android application, you could follow these steps:

1. Write the code

2. Compile the code

3. Deploy the code to the device

4. Verify that it works

5. Go to step 1

What is the problem with this? I bet you can figure it out. It is a pain in the ass, right? Most of the time a quick fix takes only a few seconds to write, but with this setup it takes much longer to verify that it works as expected on the device. And that is not the only problem. In Android development you have to support a whole bunch of handheld devices, so steps 3 and 4 must be repeated for each one: n * 2 operations, where n is the number of devices you want to test for compatibility. You would need to spend a lot of money buying devices and a lot of time testing on all of them. Imagine all that work just to fix a bug in a single method...

On server-side development

When your work is basically developing web-based applications, you need to program against a server. In the beginning, the server was a very real machine, most commonly an old one that could no longer run new games. The traditional process was to develop on that same machine: set it up as a server and then begin the cycle of developing and testing locally. A few days later you would end up with an app running on the old PC right next to your bed. Am I right, Mark?

At this point I bet you are starting to find this methodology a bit undesirable. First, you end up polluting your production machine (the name we give to the servers used by end users) with development libraries. Second, whenever you turn off the PC, maybe because you installed a new tool to test or for some other reason, the server goes down and potential users get a connection error. So it is pretty straightforward to see why developing on the same machine that runs the application is not a proper way of doing server-side development.

Another need when you create an application is to have one instance for testing and another for users, so you need two instances. But this is the simple case, in which you are the only developer. Today development teams number in the hundreds, and the software is a set of dozens of applications running concurrently, with a chaotic mesh of dependencies and constraints and a dynamic need for computing resources. So how do they do it?

The answer is a very long one, and in most cases it is an ongoing process, but virtualization is present in all of them. Today teams develop locally and deploy their code to a computing farm that consists of m operating system instances distributed transparently across n physical machines.

In big teams developers do not need to deal with the virtualization themselves, because there are usually in-house teams responsible for managing all this infrastructure complexity. Nevertheless, the complexity does not disappear, and developers still depend on the existing infrastructure to deploy and test their code.

VirtualBox and Vagrant

With the need comes the solution. In most cases the solution starts with a VirtualBox instance running one or more virtual operating systems, which we call VMs (virtual machines). In those VMs the developer sets up the whole runtime environment, which basically consists of configuring the web server and other tools, and then develops on the host machine and deploys to the configured VMs.

This was a start. It was used for several years, and for small projects it still is. It has the advantage of not polluting the environment in which the server application runs with the tools and libraries needed for development, and vice versa.

VirtualBox is a good tool, but it is mostly driven through a GUI, and the lifecycle and management of VMs end up being all too manual. So new tools arrived, one of them being Vagrant. Vagrant is a very cool tool because it lets you automate many of the tasks associated with using virtual machines. It allows you to easily download a specific operating system distribution, set it up, and start and stop it, and most importantly it is shell based, which makes it much easier to automate. Here is an example of virtualization using Vagrant.
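A typical Vagrant session can be sketched in a handful of shell commands. This is only a minimal sketch under stated assumptions: the box name `centos/7` is chosen for illustration and does not come from this article, and the `VAGRANT` and `BOX` variables and the `vm_lifecycle` function are hypothetical helpers, not part of Vagrant itself.

```shell
#!/bin/sh
# Minimal Vagrant lifecycle sketch.
VAGRANT="${VAGRANT:-vagrant}"   # hypothetical hook to swap the binary (e.g. in tests)
BOX="${BOX:-centos/7}"          # assumed box name, pick whichever box you need

vm_lifecycle() {
  # Write a Vagrantfile for the chosen box into the current directory
  $VAGRANT init "$BOX" &&
  # Download the box if needed and boot the virtual machine
  $VAGRANT up &&
  # Run a single command inside the guest over SSH
  $VAGRANT ssh -c "uname -a" &&
  # Shut the machine down, then delete it without prompting
  $VAGRANT halt &&
  $VAGRANT destroy -f
}
```

Calling `vm_lifecycle` from an empty directory walks through the whole cycle. Everything is a plain command, which is exactly what makes this kind of setup easy to script.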

Despite being an awesome tool, Vagrant still lacks features for orchestrating machines. It is great for quickly starting and stopping virtual machines for server applications. Since it does not need a GUI, you can deploy and run virtual machines easily and write scripts that automate an environment of several machines with running services, using the features available in the Vagrantfile. However, as was said, Vagrant lacks the capability to scale. If you have a virtual machine set up and need another instance running, that means another virtual machine with no connection to the first, so any change you make to the first must also be replicated on the second. Now imagine this problem with 100 server instances. That is just not good enough. The problem is that the virtual machine state and the instance state are one and the same.

Time for Docker

The solution comes with Docker. Docker is a tool that exploits several Linux kernel features to separate the state of the virtual machine definition (the image) from the state of each instance (container) running that image. This separation enables other cool features, such as versioning and building more specific images on top of other Docker images.
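To make the separation between image state and instance state concrete, here is a small sketch. It assumes the `centos` image is available. The container name `first`, the image name `centos-with-marker`, the `DOCKER` variable and the function name are all hypothetical and chosen only for illustration.

```shell
#!/bin/sh
# Sketch: changes made in one container stay in that container.
DOCKER="${DOCKER:-docker}"   # hypothetical hook, e.g. DOCKER="sudo docker"

show_state_separation() {
  # Create a file inside a container started from the centos image
  $DOCKER run --name first centos touch /tmp/marker &&
  # List the filesystem changes recorded for that container only
  $DOCKER diff first &&
  # A fresh container from the same image does not see the file
  { $DOCKER run --rm centos ls /tmp/marker || echo "marker absent in a new container"; } &&
  # Freeze the changed container as a new, separately tagged image
  $DOCKER commit first centos-with-marker &&
  # Remove the stopped container; the committed image remains
  $DOCKER rm first
}
```

The base image is never modified. The change lives in the container until it is explicitly committed as a new image, which is what makes versioning and layering images possible.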

Here is an example of a Docker image setup that decorates a base CentOS distribution with a set of tools, in this case Java, git and Maven:

FROM centos

MAINTAINER Balhau <balhau@balhau.net>

# Install the netcat and wget utilities
RUN yum install nmap-ncat --assumeyes && yum install wget --assumeyes

# Install Java
RUN cd /opt/ \
 && wget --no-cookies --no-check-certificate \
    --header "Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" \
    "http://download.oracle.com/otn-pub/java/jdk/8u60-b27/jdk-8u60-linux-x64.tar.gz" \
 && tar xzf jdk-8u60-linux-x64.tar.gz \
 && rm -rf jdk-8u60-linux-x64.tar.gz \
 && cd /opt/jdk1.8.0_60/ \
 && alternatives --install /usr/bin/java java /opt/jdk1.8.0_60/bin/java 2 \
 && alternatives --install /usr/bin/jar jar /opt/jdk1.8.0_60/bin/jar 2 \
 && alternatives --install /usr/bin/javac javac /opt/jdk1.8.0_60/bin/javac 2 \
 && alternatives --set jar /opt/jdk1.8.0_60/bin/jar \
 && alternatives --set javac /opt/jdk1.8.0_60/bin/javac

# Install git
RUN yum install deltarpm --assumeyes \
 && yum groupinstall "Development Tools" --assumeyes \
 && yum install gettext-devel openssl-devel perl-CPAN \
    perl-devel zlib-devel --assumeyes

RUN cd /opt/ \
 && wget https://github.com/git/git/archive/v2.6.0.zip -O git.zip

RUN cd /opt/ \
 && unzip git.zip \
 && rm -rf git.zip \
 && cd git-* \
 && make configure \
 && ./configure --prefix=/usr/local \
 && make install

# Install Maven
RUN cd /opt \
 && wget http://mirror.cc.columbia.edu/pub/software/apache/maven/maven-3/3.0.5/binaries/apache-maven-3.0.5-bin.tar.gz \
 && tar xzf apache-maven-3.0.5-bin.tar.gz -C /opt \
 && rm apache-maven-3.0.5-bin.tar.gz \
 && cd /usr/local \
 && ln -s /opt/apache-maven-3.0.5 maven

ENV M2_HOME /usr/local/maven
ENV PATH ${M2_HOME}/bin:${PATH}

The above code is pretty straightforward. The first instruction

FROM centos

just tells Docker to pull the image identified by centos from the Docker image registry (Docker Hub by default).

The second instruction is basically information about the author of the Dockerfile. The lines that start with the RUN keyword are plain shell commands that are executed, in order, on top of the centos base image while the new image is being built. The ENV

ENV M2_HOME /usr/local/maven

ENV PATH ${M2_HOME}/bin:${PATH}

instructions define environment variables in the image.

In this shell example you can see how to build the image described above and how to run a shell to confirm that all the tools were installed and are indeed accessible.
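Building and checking the image might look like the sketch below. It assumes the Dockerfile above sits in the current directory and that the image is tagged `balhau/centos`, the name used later in this article. The `DOCKER` and `IMAGE` variables and both function names are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: build the image, then confirm the installed tools respond.
DOCKER="${DOCKER:-docker}"        # hypothetical hook, e.g. DOCKER="sudo docker"
IMAGE="${IMAGE:-balhau/centos}"   # tag that the service Dockerfile builds on

build_and_verify() {
  # Build the image from the Dockerfile in the current directory
  $DOCKER build -t "$IMAGE" . &&
  # Each tool prints its version if it is installed and on the PATH
  $DOCKER run --rm "$IMAGE" java -version &&
  $DOCKER run --rm "$IMAGE" git --version &&
  $DOCKER run --rm "$IMAGE" mvn -version
}

open_shell() {
  # Start an interactive shell in a throwaway container
  $DOCKER run --rm -it "$IMAGE" /bin/bash
}
```

Run `build_and_verify` once after editing the Dockerfile, and use `open_shell` when you want to poke around inside the image by hand.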

With Docker, creating a machine is a lot easier than with Vagrant, with the added advantage that it runs in Linux containers, which are much less CPU intensive than full virtual machines.

So far so good, but Docker raises the level of abstraction further by letting us orchestrate services and transparently scale the instances we build, each running in an isolated user space. In the following example we will see how this is done.

After manually building the balhau/centos image, we will create a service, which in reality is just another image, start the service, and scale the number of instances up and down.

So without further ado, let's see the Dockerfile of the service:

FROM balhau/centos

MAINTAINER Balhau <balhau@balhau.net>

# Create the application directory and add the start script
RUN mkdir /var/app && mkdir /var/app/webdata
ADD start-server.sh /var/app/webdata/
RUN chmod +x /var/app/webdata/start-server.sh

# Download the latest version of the project
RUN cd /var/app/webdata \
 && wget "http://nexus.balhau.net/nexus/service/local/artifact/maven/redirect?r=snapshots&g=org.pt.pub.data&a=webpt-ws&v=LATEST" \
    -O web-pt.jar

ENTRYPOINT /var/app/webdata/start-server.sh

Basically this adds the script that starts the service, fetches the web application from a remote Nexus server, and defines the entrypoint, which is the command that runs as soon as an instance starts.
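The contents of start-server.sh are not shown in this article. As a purely hypothetical sketch, assuming the downloaded web-pt.jar is an executable jar, the script could be as small as the following. The `JAR` and `JAVA` variables and the `start_server` function are illustrative names, not the real script.

```shell
#!/bin/sh
# Hypothetical start-server.sh; the real script may differ.
JAR="${JAR:-/var/app/webdata/web-pt.jar}"   # path where the Dockerfile saves the download
JAVA="${JAVA:-java}"                        # hypothetical hook to swap the JVM binary

start_server() {
  # Fail early with a clear message if the download step did not produce the jar
  if [ ! -f "$JAR" ]; then
    echo "missing $JAR" >&2
    return 1
  fi
  # exec replaces this shell with the JVM instead of leaving an extra process around
  exec $JAVA -jar "$JAR"
}
```

A real script would end with a call to `start_server`. Keeping the startup logic in a script rather than in the Dockerfile means you can change how the service starts without touching the image definition.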

To build the webpt service we will use a docker-compose.yml file:

webpt:
  build: ./webdata
  ports:
    - "8080"

and use this file to manage the service. To start the service we first need to build the image

sudo docker-compose build

and then start the service

sudo docker-compose up

You can also scale the number of application instances up or down

sudo docker-compose scale webpt=5

sudo docker-compose scale webpt=1

You can see a shell example here.
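A scaling session might look like the sketch below. It assumes the docker-compose.yml above is in the current directory and that your docker-compose version still provides the `scale` command used in this article. The `COMPOSE` variable and the `scale_and_inspect` function are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: scale the webpt service and see where each instance is published.
COMPOSE="${COMPOSE:-docker-compose}"   # hypothetical hook, e.g. COMPOSE="sudo docker-compose"

scale_and_inspect() {
  count="$1"
  # Change the number of running webpt containers
  $COMPOSE scale "webpt=$count" || return 1
  # List the containers that belong to the project
  $COMPOSE ps || return 1
  # Print the host address mapped to port 8080 of each instance
  i=1
  while [ "$i" -le "$count" ]; do
    $COMPOSE port --index="$i" webpt 8080 || return 1
    i=$((i + 1))
  done
}
```

For example, `scale_and_inspect 5` followed by `scale_and_inspect 1` mirrors the two scale commands above. Because the compose file publishes port 8080 without fixing a host port, each instance gets its own host port, which is what lets several copies run side by side.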

Unleash the power on a sea of machines

Until now we have only used Docker on a single machine, and that is just fine for development purposes. However, for a practical approach to distributed computing we need to take into account that our work must scale across a pool of resources. Docker, while good at managing the virtual machines, has nothing to say about managing instances across a set of physical hardware. It is time for another layer of abstraction, in this case an abstraction of the physical layer. And here Mesos comes to the rescue. The official description of Mesos goes like this:

Apache Mesos abstracts CPU, memory, storage, and other compute resources away from machines (physical or virtual), enabling fault-tolerant and elastic distributed systems to easily be built and run effectively

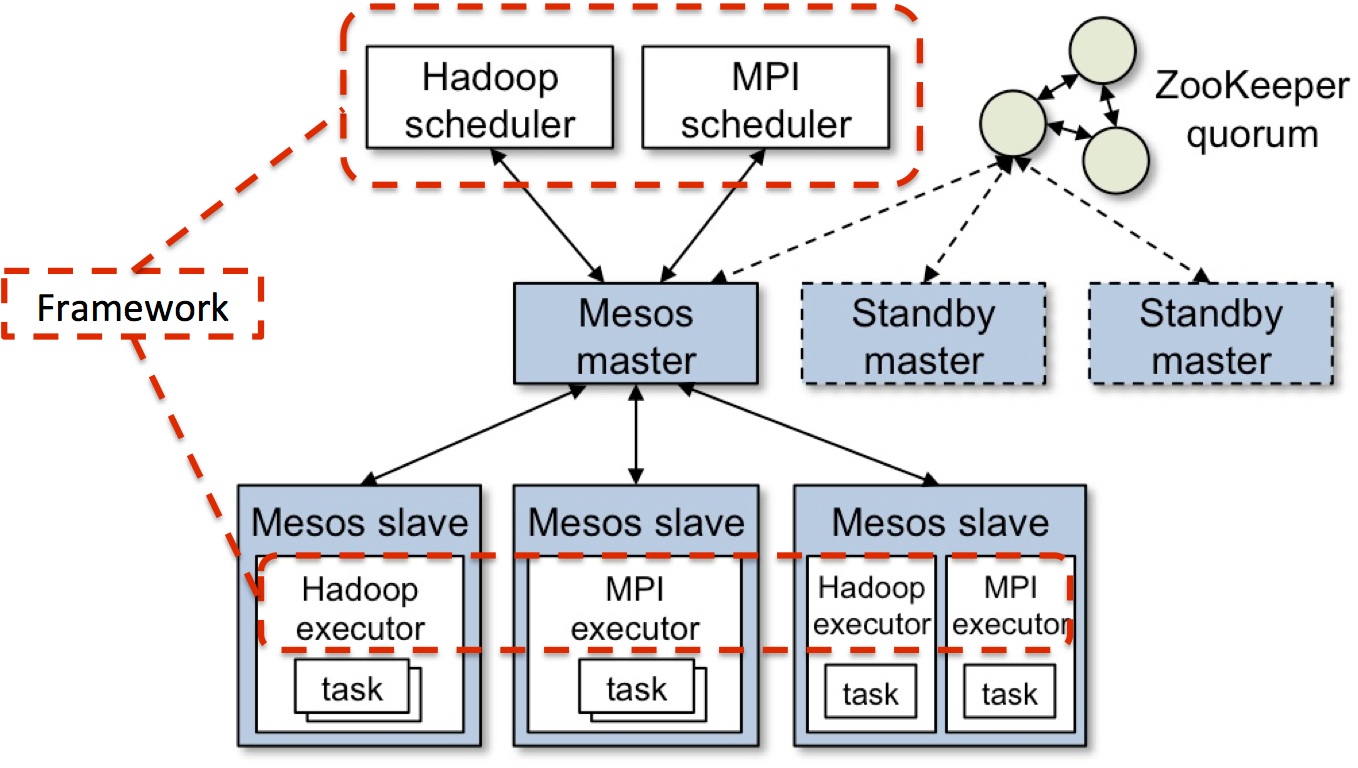

Mesos has the following arquitecture

and the best news is that Docker can be integrated with Mesos, so we can apply the elasticity of Mesos to the lightweight virtualization of Docker. With this new layer of abstraction we achieve a distributed way of deploying and managing our services.